- Implement baseline ECM

- Reading Papers

- Writing introduction and related work

My partners (Xisen, Zhankui) and I are working on conversation generation models that incorporate extra emotional information. I use this blog to record implementation details and to take notes while reading papers.

I also drew on ideas from several papers to shape the introduction and related work of our own paper.

Implement baseline ECM

- References:

- Paper: Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory

- Code: Github/ecm

- Constructing Chinese Word2Vec from the Sogou dataset

- You can also construct Chinese Word2Vec from Wikipedia, and Facebook has already released pre-trained word vectors for 294 languages.

- Usage:

- The pre-trained embedding is at /data/pretrain/corpusWord2Vec.bin.

```python
# Requires the word2vec package: pip install word2vec
import numpy as np
import word2vec

# Load the pre-trained embedding (vector size is 300)
model = word2vec.load('corpusWord2Vec.bin')

# All embedding vectors as a numpy array of shape (vocab_size, 300)
print(model.vectors)

# The Chinese word at a given vocabulary index
index = 1000
print(model.vocab[index])

# The vector for a given Chinese word
index = np.where(model.vocab == '复旦')
print(model.vectors[index])

# Words closest to '复旦大学' by cosine similarity; cosine() returns (indexes, metrics)
indexes, metrics = model.cosine('复旦大学')
for index in indexes:
    print(model.vocab[index])
```


- Pretrain a Seq2Seq model on the dataset from Neural Responding Machine for Short-Text Conversation

- Paper: Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory

Reading Papers

Conversation Modeling

Seq2Seq with Attention and Beam Search

First, here is a fantastic blog post about Seq2Seq with attention and beam search.

In this article, the author covered basic seq2seq concepts and showed that training differs from decoding. During training, the model is never exposed to its own errors, because of a common trick (teacher forcing): the ground-truth output sequence is fed into the decoder's LSTM, and the model predicts the next token at every position.
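To make the training side concrete, here is a minimal sketch of teacher forcing in plain Python. It assumes a hypothetical toy "decoder" (a fixed bigram table `TRANSITIONS` standing in for the LSTM); the only point is that the input at each step is the ground-truth previous token, so the model never conditions on its own mistakes.

```python
import math

# Hypothetical toy decoder: a fixed bigram table standing in for an LSTM.
# TRANSITIONS[prev] is the distribution over the next token given the previous one.
TRANSITIONS = {
    "<s>": {"i": 0.6, "you": 0.4},
    "i": {"am": 0.7, "feel": 0.3},
    "you": {"are": 0.9, "feel": 0.1},
    "am": {"happy": 0.45, "sad": 0.55},
    "are": {"happy": 0.8, "sad": 0.2},
    "feel": {"happy": 0.4, "sad": 0.6},
    "happy": {"</s>": 1.0},
    "sad": {"</s>": 1.0},
}


def teacher_forced_nll(target):
    """Return (negative log-likelihood, decoder inputs) for a gold target sequence.

    With teacher forcing, the input at step t is the gold token y_{t-1},
    not whatever the model would have predicted at step t-1.
    """
    nll, inputs, prev = 0.0, [], "<s>"
    for gold in target:
        inputs.append(prev)
        # A tiny floor avoids log(0) for tokens the toy table never emits.
        nll -= math.log(TRANSITIONS[prev].get(gold, 1e-12))
        prev = gold  # feed the ground truth, never the model's own guess
    return nll, inputs


nll, inputs = teacher_forced_nll(["i", "feel", "happy", "</s>"])
print(inputs)  # ['<s>', 'i', 'feel', 'happy']
print(round(nll, 4))
```

At inference time there is no gold previous token, so the decoder has to consume its own predictions; that train/test mismatch is what is usually called exposure bias.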

The author then introduced two decoding methods: greedy decoding and beam search. While beam search generally achieves better results, it is not perfect and still suffers from exposure bias.
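A minimal sketch of greedy decoding versus beam search, again on a hypothetical bigram table `TRANSITIONS` rather than a real seq2seq model. Greedy decoding is just beam search with width 1. Hypotheses are scored by summed log-probability with no length normalization; real systems usually add one, so treat that as a simplification of this sketch.

```python
import math

# Hypothetical toy model: TRANSITIONS[prev] is p(next token | previous token).
TRANSITIONS = {
    "<s>": {"i": 0.6, "you": 0.4},
    "i": {"am": 0.7, "feel": 0.3},
    "you": {"are": 0.9, "feel": 0.1},
    "am": {"happy": 0.45, "sad": 0.55},
    "are": {"happy": 0.8, "sad": 0.2},
    "feel": {"happy": 0.4, "sad": 0.6},
    "happy": {"</s>": 1.0},
    "sad": {"</s>": 1.0},
}


def beam_search(beam_width, max_len=10):
    """Return (best word list, its probability) under the toy model.

    Each hypothesis is (log-probability, tokens). Hypotheses that emit </s>
    move to `finished`; the rest are expanded at the next step.
    """
    beams, finished = [(0.0, ["<s>"])], []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = [
            (logp + math.log(p), seq + [tok])
            for logp, seq in beams
            for tok, p in TRANSITIONS[seq[-1]].items()
        ]
        # Keep only the `beam_width` highest-scoring expansions.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for logp, seq in candidates[:beam_width]:
            if seq[-1] == "</s>":
                finished.append((logp, seq))
            else:
                beams.append((logp, seq))
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished hypotheses if max_len is hit
    logp, seq = max(finished, key=lambda c: c[0])
    words = [t for t in seq if t not in ("<s>", "</s>")]
    return words, math.exp(logp)


print(beam_search(beam_width=1))  # greedy: ['i', 'am', 'sad'], p ≈ 0.231
print(beam_search(beam_width=2))  # ['you', 'are', 'happy'], p ≈ 0.288
```

Greedy decoding commits to "i" because it is locally most likely (0.6), while width 2 keeps "you" alive long enough to find the higher-probability sentence. Beam search still only maximizes the model's own scores, so it does nothing to remove exposure bias.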

Toward Controlled Generation of Text, ICML 2017

Message Passing

Message Passing Multi-Agent GANs

- The co-operation objective ensures that the message sharing mechanism guides the other generator to generate better than itself while the competing objective encourages each generator to generate better than its counterpart.

- This work presents one of the first forays into this subject, in a more traditional deep unsupervised learning setting rather than a deep reinforcement learning setting, where the reward structure is discrete and training becomes slightly more difficult.

- In this work we present a setting of multi-generator Generative Adversarial Networks with a competing objective function, which pushes the two generators to compete with each other in addition to trying to maximally fool the discriminator.

- With the message passing model, it emerged that a bottleneck has to be added for the message generator to actually learn meaningful representations of the messages.

- Message Passing Models and Co-operating Agents: Message passing based on belief propagation (Weiss and Freeman, 2001) has been one of the major algorithms employed as the principal training procedure in Probabilistic Graphical Models. The paradigm of co-operating agents has been studied in game theory (Cai and Daskalakis, 2011). Foerster et al. (2016) and Sukhbaatar et al. (2016) introduce formulations of co-operating agents with a message passing model and a common communication channel, respectively. Lazaridou et al. (2016) recently introduced a framework in which networks work co-operatively, together with a bottleneck that forces the networks to pass messages that are even interpretable by humans.

Scene Graph Generation by Iterative Message Passing, CVPR 2017

- Our major contribution is that instead of inferring each component of a scene graph in isolation, the model passes messages containing contextual information between a pair of bipartite sub-graphs of the scene graph, and iteratively refines its predictions using RNNs.
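The iterative refinement described above can be sketched in numpy as alternating updates between node (object) states and edge (relationship) states. This is a simplified sketch under stated assumptions, not the paper's model: mean pooling and a single `tanh` update stand in for its learned weighted pooling and GRU cells, and the scene, sizes, and weights (`edges`, `D`, `W_*`) are hypothetical random placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 8  # hypothetical hidden size

# Hypothetical scene: 3 objects and directed candidate relationships (subject, object).
num_nodes = 3
edges = [(0, 1), (1, 2), (0, 2)]

# Initial states; in the paper these come from visual features, here they are random.
node_h = rng.normal(size=(num_nodes, D))
edge_h = rng.normal(size=(len(edges), D))

# Random matrices standing in for learned parameters.
W_node_self, W_node_msg = rng.normal(scale=0.3, size=(2, D, D))
W_edge_self, W_edge_msg = rng.normal(scale=0.3, size=(2, D, D))


def step(node_h, edge_h):
    """One round of message passing between the node and edge sub-graphs."""
    # Node messages: mean of the states of all edges touching the node.
    node_msg = np.zeros_like(node_h)
    degree = np.zeros(len(node_h))
    for k, (s, o) in enumerate(edges):
        for n in (s, o):
            node_msg[n] += edge_h[k]
            degree[n] += 1
    node_msg /= np.maximum(degree, 1)[:, None]

    # Edge messages: sum of the subject and object node states.
    edge_msg = np.stack([node_h[s] + node_h[o] for s, o in edges])

    # Simple recurrent update (a stand-in for the paper's GRU cells).
    new_node_h = np.tanh(node_h @ W_node_self + node_msg @ W_node_msg)
    new_edge_h = np.tanh(edge_h @ W_edge_self + edge_msg @ W_edge_msg)
    return new_node_h, new_edge_h


for _ in range(3):  # iteratively refine both sets of states
    node_h, edge_h = step(node_h, edge_h)

print(node_h.shape, edge_h.shape)  # (3, 8) (3, 8)
```

Object and relationship classifiers would then read their predictions off the final `node_h` and `edge_h`, so each prediction has seen context from the rest of the graph rather than being inferred in isolation.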

Deep Communicating Agents for Abstractive Summarization, NAACL 2018

- The motivation behind our approach is to be able to dynamically attend to different parts of the input to capture salient facts.

- By passing new messages through multiple layers the agents are able to coordinate and focus on the important aspects of the input text.

- The key idea of our model is to divide the hard task of encoding a long text across multiple collaborating encoder agents, each in charge of a different subsection of the text. Each of these agents encodes their assigned text independently, and broadcasts their encoding to others, allowing agents to share global context information with one another about different sections of the document. All agents then adapt the encoding of their assigned text in light of the global context and repeat the process across multiple layers, generating new messages at each layer.

- The network is trained end-to-end using self-critical reinforcement learning (Rennie et al., 2016) to generate focused and coherent summaries.
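The encode, broadcast, and re-encode loop in the notes above can be sketched in numpy as follows. This is a rough sketch under assumptions, not the paper's architecture: each agent's encoder is a single `tanh` layer over token embeddings instead of a bidirectional LSTM, an agent's broadcast "summary" is the mean over its tokens, and the message it receives is the average of the other agents' summaries. All sizes and weights (`D`, `NUM_LAYERS`, `W_*`, `sections`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16          # hypothetical hidden size
NUM_LAYERS = 2  # hypothetical number of communication layers

# A hypothetical document split into 3 sections, one per agent;
# each section is a (num_tokens, D) matrix of token embeddings.
sections = [rng.normal(size=(n, D)) for n in (5, 7, 4)]

# Random matrices standing in for learned parameters.
W_local = rng.normal(scale=0.2, size=(D, D))
W_self = rng.normal(scale=0.2, size=(NUM_LAYERS, D, D))
W_msg = rng.normal(scale=0.2, size=(NUM_LAYERS, D, D))

# First layer: every agent encodes its own section independently.
states = [np.tanh(sec @ W_local) for sec in sections]

for layer in range(NUM_LAYERS):
    # Each agent broadcasts a summary of its section (here: mean over its tokens).
    summaries = [h.mean(axis=0) for h in states]
    new_states = []
    for a, h in enumerate(states):
        # The message to agent `a` is the average of the *other* agents' summaries.
        msg = np.mean([s for b, s in enumerate(summaries) if b != a], axis=0)
        # Re-encode every token of this section in light of the global context;
        # msg has shape (D,) and is broadcast across the section's tokens.
        new_states.append(np.tanh(h @ W_self[layer] + msg @ W_msg[layer]))
    states = new_states

print([h.shape for h in states])  # [(5, 16), (7, 16), (4, 16)]
```

Each agent keeps a per-token encoding of its own section, which is what a decoder would later attend over, while the averaged messages are the only channel through which information about the other sections flows.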

Long Text Generation via Adversarial Training with Leaked Information, AAAI 2018

Recently, Generative Adversarial Nets (GANs) that use a discriminative model to guide the training of the generative model as a reinforcement learning policy, combined with policy gradient, have shown promising results in text generation. However, the scalar guiding signal is only available after the entire text has been generated and lacks intermediate information about text structure during the generative process. (Our note: the reward is a scalar, but we need vectors, and so on.)

- We allow the discriminative net to leak its own high-level extracted features to the generative net to further help the guidance. The generator incorporates such informative signals into all generation steps through an additional MANAGER module, which takes the extracted features of the currently generated words and outputs a latent vector to guide the WORKER module for next-word generation.

Since then, various methods have been proposed for text generation via GANs (Lin et al. 2017; Rajeswar et al. 2017; Che et al. 2017). Nonetheless, the reported results are limited to cases where the generated text samples are short (say, fewer than 20 words), while the more challenging task of long text generation, which is necessary for practical tasks such as automatic generation of news articles or product descriptions, is hardly studied. (Our note: short → non-emotional.)

A main drawback of existing methods for long text generation is that the binary guiding signal from D is sparse, as it is only available once the whole text sample has been generated. Also, the scalar guiding signal for a whole text is non-informative, as it does not necessarily preserve the picture of the intermediate syntactic structure and semantics of the text being generated, which G needs in order to learn sufficiently.
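The MANAGER/WORKER mechanism from the LeakGAN notes can be sketched for a single generation step in numpy. This is an illustration under loud assumptions: the discriminator and both LSTMs are replaced by random placeholders, the MANAGER is a single linear map, and all sizes, the window `C`, and the weight names are hypothetical. It only shows the data flow: a leaked feature becomes a unit-norm goal, recent goals are summed and projected to a goal embedding `w_t`, and the WORKER scores every word as `O_t @ w_t` before a softmax.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, FEAT, GOAL, K = 10, 12, 6, 4  # hypothetical sizes
C = 3  # hypothetical: how many recent goals are summed into w_t

# Random matrices standing in for learned parameters.
W_manager = rng.normal(size=(GOAL, FEAT))  # replaces the MANAGER LSTM with one linear map
W_psi = rng.normal(size=(K, GOAL))         # projects summed goals into the WORKER's space


def manager_goal(leaked_feature):
    """MANAGER: turn the discriminator's leaked feature f_t into a unit-norm goal g_t."""
    g = W_manager @ leaked_feature
    return g / np.linalg.norm(g)


def worker_distribution(worker_out, recent_goals, temperature=1.0):
    """WORKER: next-word distribution softmax(O_t @ w_t / temperature)."""
    w = W_psi @ np.sum(recent_goals, axis=0)  # goal embedding w_t, shape (K,)
    logits = worker_out @ w / temperature      # one score per vocabulary word, shape (VOCAB,)
    logits = logits - logits.max()             # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()


goals = []
for t in range(5):
    # Placeholders: in LeakGAN, f_t is D's feature vector for the prefix generated so far,
    # and O_t is the WORKER LSTM's output matrix (one K-dim row per vocabulary word).
    f_t = rng.normal(size=FEAT)
    O_t = rng.normal(size=(VOCAB, K))
    goals.append(manager_goal(f_t))
    probs = worker_distribution(O_t, goals[-C:])
    next_word = int(np.argmax(probs))  # greedy choice, for illustration only
    print(t, next_word)
```

Unlike a single scalar reward at the end of the sequence, the leaked feature reaches the generator at every step through `w_t`, which is the intermediate signal the notes above say is missing.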

Writing introduction and related work

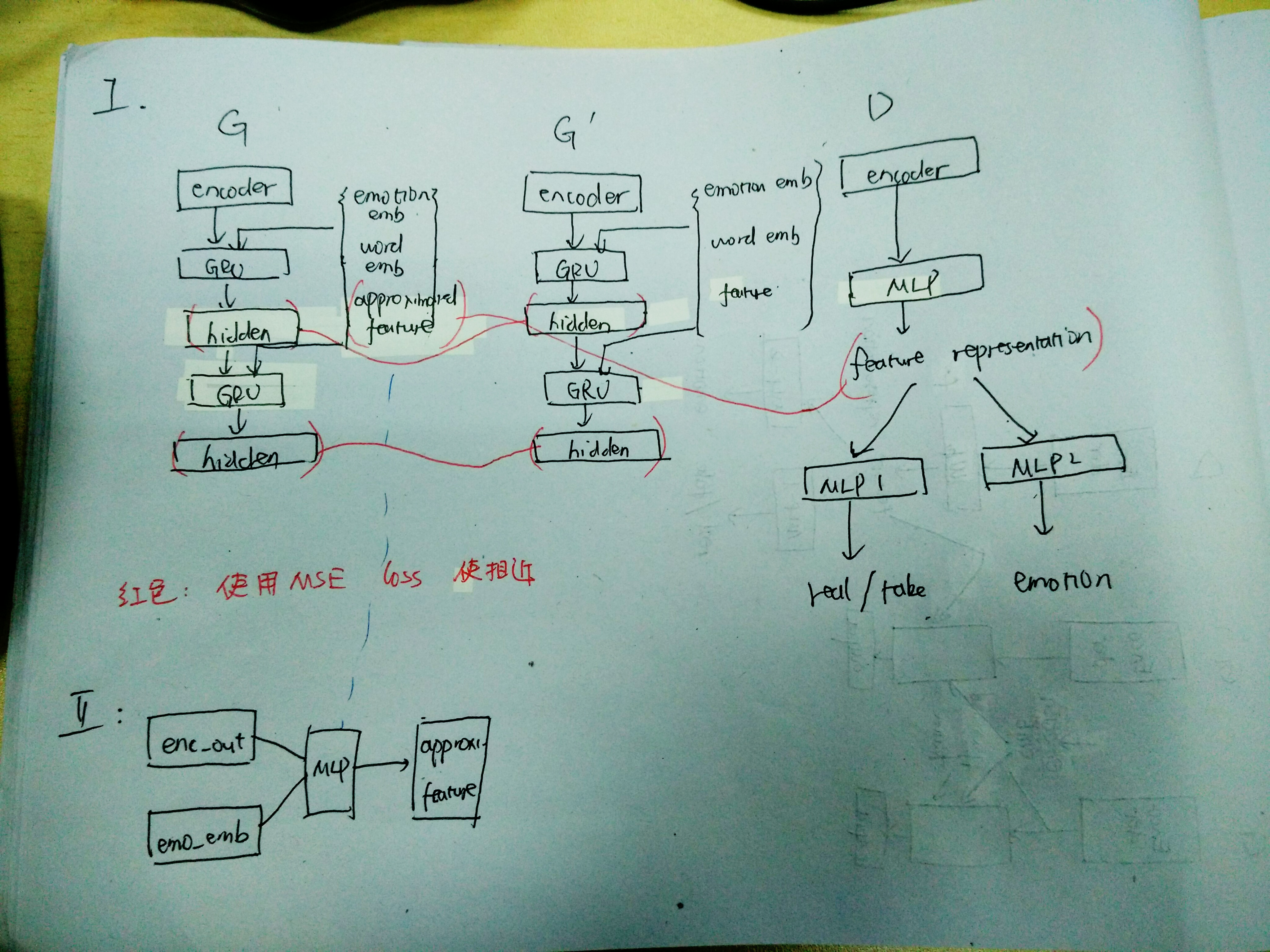

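- From the generator-discriminator perspective: use the discriminator to assist the generator's learning. There are different ways of using the discriminator (give examples of how each one is used concretely), but in essence they are all interactions between the discriminator and the generator. However, we argue that current interaction is limited to the XX level and is not sufficient, so we could consider deepening it. This addresses the non-informativeness problem.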

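- Emotional conversation generation has gradually been attracting attention; it is a problem of attribute-conditioned text generation. Describe the general approaches that current work on attribute-conditioned or controlled dialogue generation takes (look at Huang's paper; we need to study attribute-conditioned and controlled text generation (search for the "controlled text generation" paper)). We believe that during generation, more attribute or control information can be introduced through the discriminator, strengthening the generator's training. Hence, we propose XXX. This addresses the non-emotional problem.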

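- The first point argues from the model perspective; the second argues from the application perspective. The two could perhaps be combined.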

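- The background literature we need to add falls into two main parts. The first part is message passing for general applications, as well as message passing for generative models. The second part is attribute-conditioned or controlled text generation: emotional chatting (Minlie Huang's work), controlled text generation, text generation with personality, and so on.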